Why skills beat tools in 2025

AI features change weekly; fundamentals don’t. This guide covers AI survival skills that Indian CA, finance, and SME teams can apply immediately to GST, TDS, month-end close, vendor management, or product operations. When a UI shifts or a model updates, teams that rely on tips and tricks lose momentum. Teams that master how to ask, verify, automate, decide, and package keep output quality steady and switch tools without drama. You will see one repeating pattern: frame the task → supply data → set constraints → demand structured output → verify → chain steps → apply domain rules → present a decision. In my view, following this loop means fewer rewrites, fewer errors, and faster cycle times.

To keep it concrete, each skill below includes plain steps, a full-sentence example, and a short checklist. Read once, then pick one workflow and implement it this week. You will end up with a cleaner process, measurable time saved, and a small library you can reuse. In my view, these are the AI skills for accountants that give Indian finance teams a practical path to results. Let’s begin where every good result starts: the ask.

New to structured prompting? Read AI Prompt Engineering in 2025 for a quick mindset shift.

Quick Summary

- These AI survival skills help Indian CA and finance teams ship results despite tool changes.

- Do four things well (prompt strategy, data verification, no-code workflow automation for finance, and domain judgment), then package decisions cleanly.

- Add guardrails with a one-page AI governance policy for India, measure ROI monthly, and save reusable kits.

- Implement one workflow this week (GST/TDS/month-end/vendor ageing) and track hours saved.

1) Prompt Strategy & Problem Framing (AI Survival Skills)

A vague ask creates vague output. In my view, a prompt should read like a mini-brief that defines the role, the task, the data, the constraints, and the format, with acceptance criteria and one short self-review loop. Clear briefs cut rewrites and keep results stable when tools change, which makes this the first AI survival skill for accountants.

Use these four steps to structure your prompt clearly:

- State the Role, Task, Data, Constraints, and Format in one short block so the model receives a complete brief.

- Add acceptance criteria in a single sentence so the model understands how success will be judged.

- Request a structured format (CSV, JSON, or a table) so you avoid manual cleanup later.

- Ask for a self-critique pass before the final draft so obvious gaps are fixed by the model itself.

Example: “Act as a finance analyst for an Indian SME. Draft a factual vendor email that summarises GST mismatches for Apr–Jun 2025. Use fields: Invoice_No, Date in dd-mm-yyyy, GSTIN, Taxable_Value, GST_Paid, and GST_Due. Flag any variance above ₹500. Return a five-sentence email and a CSV with the same columns plus Variance and Flag.”
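If you reuse the same brief across many reconciliations, it can help to keep its parts as fields rather than free text. The sketch below is a minimal illustration, assuming a hypothetical `PromptBrief` container and `missing_columns` helper (neither is a library API); it assembles the brief from the example above and checks that the model's CSV header contains every column you asked for. The acceptance sentence is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class PromptBrief:
    """Hypothetical container for the parts of a mini-brief (not a library API)."""
    role: str
    task: str
    data: str
    constraints: str
    output_format: str
    acceptance: str
    self_review: bool = True

    def render(self) -> str:
        """Join the parts into one prompt block, one labelled line per part."""
        lines = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Data: {self.data}",
            f"Constraints: {self.constraints}",
            f"Format: {self.output_format}",
            f"Acceptance criteria: {self.acceptance}",
        ]
        if self.self_review:
            # One self-critique pass before the final draft.
            lines.append("Before giving the final answer, critique your draft against "
                         "the acceptance criteria and fix any gaps.")
        return "\n".join(lines)


def missing_columns(csv_header: str, required: list) -> list:
    """Return required columns that are absent from the model's CSV header row."""
    present = {name.strip() for name in csv_header.split(",")}
    return [name for name in required if name not in present]


REQUIRED = ["Invoice_No", "Date", "GSTIN", "Taxable_Value",
            "GST_Paid", "GST_Due", "Variance", "Flag"]

brief = PromptBrief(
    role="Finance analyst for an Indian SME",
    task="Draft a factual vendor email summarising GST mismatches for Apr-Jun 2025",
    data="Invoice_No, Date (dd-mm-yyyy), GSTIN, Taxable_Value, GST_Paid, GST_Due",
    constraints="Flag any variance above ₹500",
    output_format="A five-sentence email plus a CSV with the same columns plus Variance and Flag",
    acceptance="Every flagged invoice appears in both the email and the CSV",  # illustrative
)
print(brief.render())
print(missing_columns("Invoice_No,Date,GSTIN,Taxable_Value,GST_Paid,GST_Due,Variance", REQUIRED))
# ['Flag']
```

A missing column is a cheap signal to re-run the prompt before anyone starts manual cleanup.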

Checklist: constraints stated • acceptance criteria included • structured output requested • one critique loop completed.

Next: A good ask still fails if the data is wrong; verify the numbers next.

For a step-by-step brief you can copy, see How to Use ChatGPT Like a Pro in 2025.

2) Data Verification (prove the numbers)

“Data verification” is the small, repeatable routine you run before any automation, email, or decision goes out. AI can format numbers; only you can prove them. In my view, verification must live in the same sheet or document so anyone can see what you checked and when.

Apply these five checks before you trust any figure:

- Compare totals to a control or ledger and record which source you matched for traceability.

- Enforce ranges and formats so dates stay in period and amounts are not negative unless intended.

- Detect duplicates by checking repeated Invoice_No and GSTIN pairs within the same period.

- Sample five to ten rows and match them against PDFs or ERP exports to confirm accuracy.

- Confirm units and labels so you do not mix rupees and thousands or dd-mm-yyyy and mm-dd-yyyy.

Example: Format the data as an Excel Table, then add a Variance column with =ABS([@GST_Paid]-[@GST_Due]) and a Flag column with =IF([@Variance]>500,"YES","NO"). Add a Duplicate helper column with =COUNTIFS([Invoice_No],[@Invoice_No],[GSTIN],[@GSTIN])>1 and highlight rows where it is TRUE. Write a single audit line at the top: “Totals match ledger; six rows sampled to PDFs; date format enforced across all rows.”
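If the same data is exported to CSV, the automatable checks can also be scripted. The sketch below is a minimal, standard-library illustration under stated assumptions: rows are dictionaries with the column names used above, amounts are strings parsed as `Decimal`, and `check_rows` and `sample_for_review` are hypothetical helpers. It covers totals, dates, negatives, duplicates, Variance and Flag; matching the sample to PDFs and confirming units (steps 4 and 5) stay manual.

```python
import random
from collections import Counter
from datetime import date, datetime
from decimal import Decimal


def check_rows(rows, period_start, period_end, control_total, threshold=Decimal("500")):
    """Run the automatable checks, annotate each row in place, and return issues.

    Adds Variance, Flag and Verified to every row. Verified = YES here only means
    the row passed the automated checks; the manual sample (step 4) still applies.
    """
    issues = []

    # Step 1: compare the total to the ledger/control figure.
    total_paid = sum(Decimal(r["GST_Paid"]) for r in rows)
    if total_paid != control_total:
        issues.append(f"Total GST_Paid {total_paid} does not match control {control_total}")

    # Step 3: count each Invoice_No + GSTIN pair once, up front.
    pair_counts = Counter((r["Invoice_No"], r["GSTIN"]) for r in rows)

    for r in rows:
        row_issues = []
        # Step 2: dates must parse as dd-mm-yyyy and fall inside the period.
        try:
            d = datetime.strptime(r["Date"], "%d-%m-%Y").date()
            if not period_start <= d <= period_end:
                row_issues.append("date outside period")
        except ValueError:
            row_issues.append("date not dd-mm-yyyy")
        paid, due = Decimal(r["GST_Paid"]), Decimal(r["GST_Due"])
        if paid < 0 or due < 0:
            row_issues.append("negative amount")
        if pair_counts[(r["Invoice_No"], r["GSTIN"])] > 1:
            row_issues.append("duplicate Invoice_No + GSTIN")

        # Same logic as the Variance and Flag columns in the sheet.
        r["Variance"] = abs(paid - due)
        r["Flag"] = "YES" if r["Variance"] > threshold else "NO"
        r["Verified"] = "NO" if row_issues else "YES"
        issues.extend(f"{r['Invoice_No']}: {msg}" for msg in row_issues)
    return issues


def sample_for_review(rows, k=6, seed=2025):
    """Step 4 stays manual: pick k rows to match against PDFs or ERP exports."""
    return random.Random(seed).sample(rows, k=min(k, len(rows)))


# Hypothetical rows with placeholder GSTINs: INV-102 is duplicated,
# and INV-103 was keyed as mm-dd-yyyy.
rows = [
    {"Invoice_No": "INV-101", "Date": "12-04-2025", "GSTIN": "GSTIN-A", "GST_Paid": "1800", "GST_Due": "1800"},
    {"Invoice_No": "INV-102", "Date": "03-05-2025", "GSTIN": "GSTIN-A", "GST_Paid": "900", "GST_Due": "1500"},
    {"Invoice_No": "INV-102", "Date": "03-05-2025", "GSTIN": "GSTIN-A", "GST_Paid": "900", "GST_Due": "1500"},
    {"Invoice_No": "INV-103", "Date": "06-14-2025", "GSTIN": "GSTIN-B", "GST_Paid": "450", "GST_Due": "400"},
]
issues = check_rows(rows, date(2025, 4, 1), date(2025, 6, 30), control_total=Decimal("3150"))
for msg in issues:
    print(msg)
```

In this toy data the duplicate inflates the total to 4050 against a control of 3150, so the totals check and the duplicate check point at the same problem from two sides.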

Data verification checklist:

- Totals match the ledger. Write the exact ledger/control source you matched.

- Dates are within the reporting period and formatted dd-mm-yyyy. Amounts are non-negative unless intended.

- No duplicates exist for the Invoice_No + GSTIN pair within the period.

- A sample of 5–10 rows was checked against PDFs/ERP exports with no mismatches.

- Units and labels are consistent (₹ vs thousands; one date format only), and a dated audit note is added.

Next: Mark rows that pass these checks as Verified = YES, and use only those rows (and, if relevant, rows where Flag = YES) for automation: emails, merges, and reports.

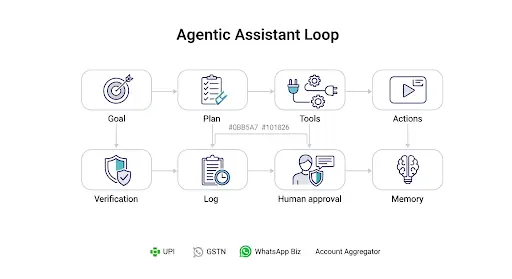

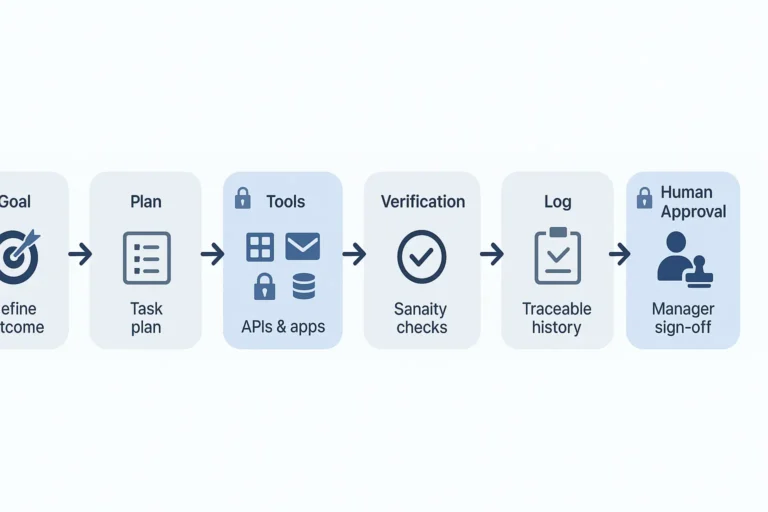

3) No-Code Workflow Automation for Finance

A workflow is a simple, visible chain that moves clean data from the model into Sheets, Docs, and email with one human review gate. Automate the middle of the process, not the judgment. In my view, a chain that pauses for one human review gives consistency and speed without losing control.

Build the chain in this order and keep each hop observable:

- Generate the table and flags in Sheets or Excel so every row is traceable to its source.

- Fill a summary template in Docs with core numbers and one next step so drafting time drops.

- Create email drafts with placeholders for vendor name, invoice list, and amounts so sending is accurate.

- Record a status log with Sent, Date, and Owner so follow-ups are clear and auditable.

Example: Import the ageing report into Sheets. Use your prompt to prepare three email patterns for the 0–30, 31–60, and 61–90-day buckets. Create drafts that pull vendor details directly from the sheet. A reviewer edits and sends each draft, and a script writes Sent, Date, and Owner back to the log. Saving twelve minutes per vendor across forty vendors (480 minutes) yields about eight hours per month, assuming one run per month.
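The drafting and logging steps can be sketched in a few lines. This is a minimal illustration, not a Sheets or Gmail integration: the three email patterns, the vendor name, and the helpers `bucket`, `draft_email`, `log_sent`, and `hours_saved` are hypothetical. The code only produces drafts and records what a reviewer sent; the human gate stays in place.

```python
from datetime import date
from string import Template
from typing import Optional

# Hypothetical email patterns, one per ageing bucket (the model drafts these once).
PATTERNS = {
    "0-30": Template("Dear $vendor, please review invoices $invoices (total ₹$amount)."),
    "31-60": Template("Dear $vendor, a reminder that invoices $invoices (total ₹$amount) are 31-60 days old."),
    "61-90": Template("Dear $vendor, invoices $invoices (total ₹$amount) are now 61-90 days old; please respond this week."),
}

status_log = []  # one entry per email a reviewer actually sends


def bucket(days_outstanding: int) -> Optional[str]:
    """Map days outstanding to a bucket; anything over 90 days goes to a human."""
    if days_outstanding <= 30:
        return "0-30"
    if days_outstanding <= 60:
        return "31-60"
    if days_outstanding <= 90:
        return "61-90"
    return None


def draft_email(vendor: str, invoices: list, amount: int, days_outstanding: int) -> Optional[str]:
    """Fill the pattern's placeholders; returns a draft only, never sends it."""
    key = bucket(days_outstanding)
    if key is None:
        return None
    return PATTERNS[key].substitute(vendor=vendor, invoices=", ".join(invoices), amount=amount)


def log_sent(vendor: str, owner: str, sent_on: date) -> None:
    """Record Sent, Date and Owner after the reviewer sends the email."""
    status_log.append({"Vendor": vendor, "Sent": "YES",
                       "Date": sent_on.strftime("%d-%m-%Y"), "Owner": owner})


def hours_saved(minutes_per_vendor: float, vendors: int) -> float:
    """Monthly ROI line: minutes saved per vendor times vendors, in hours."""
    return minutes_per_vendor * vendors / 60


draft = draft_email("Acme Traders", ["INV-201", "INV-207"], 48250, days_outstanding=45)
print(draft)
log_sent("Acme Traders", "AP reviewer", date(2025, 10, 3))
print(hours_saved(12, 40))  # 8.0
```

Returning `None` for anything over 90 days is a deliberate choice: those cases fall outside the three patterns and should reach a person rather than a template.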

Checklist: trigger defined • reviewer named • status log captured • ROI noted in the SOP.

Next: Speed only helps when decisions are correct—add domain judgment now.

Choose features that actually help the chain—start with GPT-5 features you should actually use in 2025.

4) Domain Judgment with PEER (decisions you can sign)

PEER (Purpose, Evidence, Exceptions, Recommendation) is a decision frame that turns model text into advice you can sign. Models can sound right when they are wrong. In my view, your moat is domain logic: the core rule, the exception, and the safer recommendation.

Complete these four boxes before you recommend anything:

- Write the Purpose in one sentence so the business outcome is explicit (tax saved, risk reduced, or time saved).

- List the Evidence (slabs, proofs, dates, and verified figures) so the basis is clear to reviewers.

- State the Exceptions that change the answer so edge cases are visible and testable.

- Give a Recommendation with an owner and a date so the action is specific and executable.

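Example: “Purpose: maximise deductions for a salaried employee in AY 2026–27 who has opted for the old tax regime. Evidence: Section 80C is fully used at ₹1,50,000, and NPS Tier I contributions are permitted. Exceptions: Section 80CCD(1B) allows an extra ₹50,000 for NPS Tier I over and above the 80C limit, but only under the old regime; under the default new regime (Section 115BAC), only employer NPS contributions under 80CCD(2) are deductible; employer NPS under 80CCD(2) is separate from both limits; Form 12BB is required to claim the deduction through payroll. Recommendation: contribute ₹50,000 to NPS Tier I under 80CCD(1B); the employee provides proof by 05-Oct; payroll applies the change in the October run. Citation: Income-tax Act, 1961, ss. 80C, 80CCD(1B), 80CCD(2).”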
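If PEER memos are stored in a tracker or sheet, a small completeness check can enforce the checklist before anyone signs. The sketch below assumes a hypothetical `PeerMemo` record with illustrative field names; the year on the due date is assumed for illustration. It checks only that each box is filled, not that the tax reasoning is correct.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class PeerMemo:
    """Hypothetical PEER memo record; field names are illustrative."""
    purpose: str
    evidence: List[str]
    exceptions: List[str]
    recommendation: str
    owner: str
    due: Optional[date]
    citation: str

    def gaps(self) -> List[str]:
        """Return checklist items still missing (an empty list means ready for sign-off)."""
        missing = []
        if not self.purpose.strip():
            missing.append("purpose")
        if not self.evidence:
            missing.append("evidence")
        if not self.exceptions:
            missing.append("exception")
        if not self.recommendation.strip():
            missing.append("recommendation")
        if not self.owner.strip():
            missing.append("owner")
        if self.due is None:
            missing.append("due date")
        if not self.citation.strip():
            missing.append("citation")
        return missing


memo = PeerMemo(
    purpose="Maximise deductions for a salaried employee in AY 2026-27 (old tax regime)",
    evidence=["Section 80C fully used at ₹1,50,000", "NPS Tier I contributions permitted"],
    exceptions=["80CCD(1B) is not available under the new regime (Section 115BAC)"],
    recommendation="Contribute ₹50,000 to NPS Tier I under 80CCD(1B)",
    owner="Employee (proof); Payroll (October run)",
    due=date(2025, 10, 5),  # year assumed for illustration
    citation="Income-tax Act, 1961, ss. 80C, 80CCD(1B)",
)
print(memo.gaps())  # []
```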

Checklist: PEER complete • exception explicit • one-line citation present • action/owner/date set.

Next: A correct decision still fails if people cannot act; package it clearly.

5) Communication & Packaging (make action obvious)

This is how you present results so busy stakeholders can act fast. Stakeholders want outcomes, numbers, dates, and owners. In my view, formatting for action removes ambiguity and speeds execution.

Use this three-part pack on every deliverable:

- Write a five-line executive summary that states the goal, the key finding, the ₹ impact, the risk, and the next step.

- Put facts in an evidence table so numbers are easy to scan, compare, and verify.

- Create a next-steps checklist that uses verbs, owners, and due dates so responsibilities are explicit.

Example: “Goal: close GST mismatches for Apr–Jun 2025. Key finding: three invoices show mismatches. ₹ impact: total variance of ₹590. Risk: filing delay, with low interest exposure at this amount. Next step: request vendor documents and confirm values in accounts payable by 08-Oct-2025.” The checklist reads: “AP confirms three invoices by 05-Oct-2025; Vendor shares a debit note by 08-Oct-2025; FinOps updates the ledger by 09-Oct-2025.” Add a header such as “v1.2 (27-Sep-2025 10:30 IST)”.
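The three-part pack can also be rendered from data so every deliverable has the same shape. This is a minimal plain-text sketch; `render_pack` and its argument layout are hypothetical, and the evidence table uses only figures from the example. It refuses to render if any of the five summary lines is missing.

```python
from datetime import datetime

SUMMARY_KEYS = ("Goal", "Key finding", "₹ impact", "Risk", "Next step")


def render_pack(version, stamped_at, summary, evidence, steps):
    """Render a plain-text pack: version header, five-line summary,
    evidence table (first row = header), and next-steps checklist."""
    missing = [key for key in SUMMARY_KEYS if key not in summary]
    if missing:
        raise ValueError(f"summary is missing: {', '.join(missing)}")
    out = [f"{version} ({stamped_at:%d-%b-%Y %H:%M} IST)", ""]
    out += [f"{key}: {summary[key]}" for key in SUMMARY_KEYS]
    out.append("")
    out += [" | ".join(str(cell) for cell in row) for row in evidence]
    out.append("")
    # Each step is (verb phrase, owner, due date) so the action is explicit.
    out += [f"[ ] {owner}: {action} by {due}" for action, owner, due in steps]
    return "\n".join(out)


pack = render_pack(
    "v1.2",
    datetime(2025, 9, 27, 10, 30),
    {
        "Goal": "Close GST mismatches for Apr-Jun 2025",
        "Key finding": "Three invoices show mismatches",
        "₹ impact": "Total variance of ₹590",
        "Risk": "Filing delay, with low interest exposure at this amount",
        "Next step": "Request vendor documents and confirm values in AP by 08-Oct-2025",
    },
    [("Metric", "Value"), ("Invoices with variance", 3), ("Total variance (₹)", 590)],
    [("confirm the three invoices", "AP", "05-Oct-2025"),
     ("share a debit note", "Vendor", "08-Oct-2025"),
     ("update the ledger", "FinOps", "09-Oct-2025")],
)
print(pack)
```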

Checklist: summary present • numbers in a table • CTAs with verbs • version/time stamp added.

Next: Before you scale, keep work inside guardrails with policy.

6) AI Governance India (DPDP-aware policy, practical)

A one-page policy defines the allowed, review, and restricted uses of AI in your organisation. Move fast within policy. In my view, a one-page document is more likely to be used than a long manual, and it should map to India’s Digital Personal Data Protection Act, 2023 (DPDP Act) and your client NDAs.

Write the policy with these four blocks and make them visible:

- Mark Allowed work as public or information-only tasks in approved tools so routine use is simple.

- Mark Review work as internal non-PII tasks that need manager sign-off so risk stays controlled.

- Mark Restricted work as client or PII tasks that require approved private tools, anonymisation or encryption, a usage log, and a scheduled deletion.

- Set Retention as a 90-day default purge unless contract or law requires longer.

Example: Classify each task as Tier A (Allowed), Tier B (Review), or Tier C (Restricted). Tier A proceeds without extra steps. Tier B requires manager review. Tier C requires DPO or lead approval, approved private tools, a log entry, and a deletion date. Maintain a tool registry listing each tool, the owner, the purpose, and the last review.
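The tiering rule and the retention default are simple enough to encode in a checklist tool or intake form. The sketch below is a minimal illustration with hypothetical names (`classify`, `purge_date`, `TIER_CONTROLS`); it picks the strictest tier that applies and computes a purge date from the 90-day default, extended only when a contract or law requires longer. It is not a DPDP compliance check.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical mapping of tiers to the controls named in the policy.
TIER_CONTROLS = {
    "A": [],                                    # Allowed: public or information-only work
    "B": ["manager review"],                    # Review: internal, non-PII work
    "C": ["DPO or lead approval", "approved private tool",
          "usage log entry", "deletion date"],  # Restricted: client or PII work
}


def classify(has_pii: bool, is_client_data: bool, is_internal: bool) -> str:
    """Pick the strictest tier that applies."""
    if has_pii or is_client_data:
        return "C"
    if is_internal:
        return "B"
    return "A"


def purge_date(created: date, default_days: int = 90, required_days: Optional[int] = None) -> date:
    """90-day default purge, extended only when a contract or law requires longer."""
    days = max(default_days, required_days or 0)
    return created + timedelta(days=days)


tier = classify(has_pii=True, is_client_data=True, is_internal=True)
print(tier, TIER_CONTROLS[tier], purge_date(date(2025, 9, 27)))
```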

Checklist: data class marked • approval captured • usage logged • deletion scheduled.

Next: Guardrails in place—measure quality and ROI so improvements continue.

7) Evaluation & ROI (make quality visible)

Quality improves when success is defined and reported. In my view, scoring next to the work and publishing a monthly trend keep teams honest and leaders supportive.

Apply this simple scoring model and keep it updated:

- Score Accuracy, Completeness, Timeliness, and Tone from 1 to 5 so quality is visible at a glance.

- Re-run a golden set of twenty past cases after any prompt or model change so regressions are caught early.

- Tag defects as math, date, policy, or format and record the fix so lessons accumulate and the same defect does not recur.

- Report hours saved per month or days faster to close so impact is expressed in business terms.

Example: After adding CSV output to the prompt, the month-end memo reached accuracy 4.6/5 and saved eight hours per month. Two defects were fixed: a date mismatch and a missing note. The trend line appears in the team report.
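The scoring model reduces to averages per dimension plus a before/after comparison on the golden set. The sketch below uses hypothetical scores for twenty cases and hypothetical helpers (`average_scores`, `regressions`, `log_defect`); it shows how an Accuracy average of 4.6 could arise and how a drop in another dimension is caught even when Accuracy improves.

```python
from statistics import mean

DIMENSIONS = ("Accuracy", "Completeness", "Timeliness", "Tone")
DEFECT_TAGS = {"math", "date", "policy", "format"}


def average_scores(cases):
    """Average each 1-5 rubric dimension across the golden set."""
    for case in cases:
        for dim in DIMENSIONS:
            if not 1 <= case[dim] <= 5:
                raise ValueError(f"{dim} score must be between 1 and 5")
    return {dim: round(mean(case[dim] for case in cases), 2) for dim in DIMENSIONS}


def regressions(before, after, tolerance=0.0):
    """Dimensions whose golden-set average fell after a prompt or model change."""
    return [dim for dim in DIMENSIONS if after[dim] < before[dim] - tolerance]


def log_defect(log, case_id, tag, fix):
    """Tag each defect with one agreed category and record the fix."""
    if tag not in DEFECT_TAGS:
        raise ValueError(f"unknown defect tag: {tag}")
    log.append({"case": case_id, "tag": tag, "fix": fix})


# Hypothetical golden set of twenty scored cases after a prompt change.
golden_after = ([{"Accuracy": 5, "Completeness": 5, "Timeliness": 4, "Tone": 5}] * 12
                + [{"Accuracy": 4, "Completeness": 4, "Timeliness": 4, "Tone": 4}] * 8)
before = {"Accuracy": 4.1, "Completeness": 4.5, "Timeliness": 4.2, "Tone": 4.6}  # hypothetical
after = average_scores(golden_after)
print(after)                       # Accuracy averages to 4.6
print(regressions(before, after))  # ['Timeliness']
defects = []
log_defect(defects, "case-07", "date", "enforce dd-mm-yyyy in the prompt")
```

A non-zero `tolerance` can absorb small scoring noise between reviewers; zero flags any drop.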

Checklist: rubric filled • golden set run • defects tagged • ROI line added.

Next: When a method works, save and reuse it so nobody starts from zero.

8) Reuse Library (don’t start from zero)

A reuse library is a set of named kits (prompts, templates, sample data, checklists) that saves people from starting on a blank page. Reuse is a quiet multiplier. In my view, named kits prevent rework and make onboarding faster.

Build the library with these elements and simple naming:

- Store prompt files, a short style guide, and a data dictionary so outputs stay consistent across people.

- Keep templates for PEER memos, SOP one-pagers, checklists, and emails so drafting takes minutes, not hours.

- Use versioned names such as GST-reco_prompt_v2_2025-09-27.md so changes are obvious at a glance.

- Add tags and owners (Close/GST/TDS) so people can find the right kit and know who maintains it.

Example: Create a “GST reconciliation kit” that contains the Skill-1 prompt, the Skill-2 verification checklist and sample CSV, the Skill-5 email template, and a PEER memo template. Assign an owner and set a monthly review date. New staff start with the kit instead of a blank page.
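A consistent naming convention also makes the library scriptable, for example to list only the newest version of each kit file. The sketch below assumes names follow the `kit_kind_vN_yyyy-mm-dd.ext` shape of the GST-reco_prompt_v2_2025-09-27.md example; the regular expression and helper names are hypothetical.

```python
import re
from datetime import date

# Pattern for names like GST-reco_prompt_v2_2025-09-27.md: kit_kind_vN_yyyy-mm-dd.ext
NAME_RE = re.compile(
    r"^(?P<kit>[A-Za-z0-9-]+)_(?P<kind>[a-z]+)_v(?P<version>\d+)_"
    r"(?P<date>\d{4}-\d{2}-\d{2})\.(?P<ext>[a-z]+)$"
)


def kit_filename(kit: str, kind: str, version: int, on: date, ext: str = "md") -> str:
    """Build a versioned, dated file name."""
    return f"{kit}_{kind}_v{version}_{on.isoformat()}.{ext}"


def parse_kit_filename(name: str) -> dict:
    """Split a file name back into its parts, rejecting names that break the convention."""
    match = NAME_RE.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name}")
    return {
        "kit": match["kit"],
        "kind": match["kind"],
        "version": int(match["version"]),
        "date": date.fromisoformat(match["date"]),
        "ext": match["ext"],
    }


def latest_versions(names) -> dict:
    """Keep only the highest version of each (kit, kind) pair."""
    best = {}
    for name in names:
        parsed = parse_kit_filename(name)
        key = (parsed["kit"], parsed["kind"])
        if key not in best or parsed["version"] > best[key][0]:
            best[key] = (parsed["version"], name)
    return {key: name for key, (_, name) in best.items()}


print(kit_filename("GST-reco", "prompt", 2, date(2025, 9, 27)))  # GST-reco_prompt_v2_2025-09-27.md
print(latest_versions(["GST-reco_prompt_v1_2025-08-01.md",
                       "GST-reco_prompt_v2_2025-09-27.md",
                       "GST-reco_checklist_v1_2025-08-01.md"]))
```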

Checklist: owner named • version/date set • tags added • monthly review booked.

Next: You now have a repeatable loop; apply it to one workflow this week.

Grab the AI Model Selection Flowchart + Prompt Pack (free) to seed your kit.

Points to Remember

Based on the above, keep these key points about AI survival skills in mind:

- Prompts are mini-briefs that must include constraints and format.

- Numbers and dates must be verified before anything leaves your desk.

- Only repeatable steps should be automated, and one human review should remain.

- The PEER frame converts AI text into a decision that stands up to review.

- A one-page, DPDP-aware AI governance policy enables speed while limiting risk.

- A simple score and a monthly trend keep quality improving.

- Versioned kits prevent rework and make onboarding faster.

Conclusion

In my view, you do not need a new tool every week to benefit from AI; you need a repeatable way to deliver results. Start with one workflow: vendor ageing emails, GST reconciliation, or month-end close. Apply the eight AI survival skills in order: prompt strategy, data verification, no-code workflow automation for finance, domain judgment, communication, AI governance for India, evaluation, and reuse. Ship a clean deliverable, record hours saved, and save your PEER memo template and prompts to the library. As you repeat the loop, quality improves, cycle time drops, and risk stays contained. That is how small finance and CA teams turn AI skills for accountants into a steady, compounding advantage.

FAQ: AI Survival Skills

Which tools are enough to start?

A reliable chat model, Google Sheets or Excel, Google Docs, and Gmail or Outlook are sufficient. Add light automation after the manual flow is stable.

How do I reduce hallucinations?

Provide source data, set constraints, request structured output, and run the five verification checks. Keep a human review before anything leaves the company.

Can I upload client data to AI tools?

Only if policy allows it. Prefer anonymised samples, use approved private tools, log usage, and set a deletion date.

How do I show impact to managers?

Report hours saved per month, rubric scores, and one before/after example tied to ₹ or cycle time. Include a one-line ROI in each memo.

What if public models are banned?

Use approved private models, or apply the same prompt patterns offline with manual review until policy changes.

When should I automate and when should a human decide?

Automate drafting, formatting, and lookups; keep human sign-off for legal, tax, money-movement, and client-facing commitments.

What is PEER and when do I use it?

PEER = Purpose, Evidence, Exceptions, Recommendation. Use it after verification to turn model text into a one-page decision with owner and due date.

How often should I update prompts and SOPs?

Re-run your golden set monthly and after any model change; update the prompt/SOP only when accuracy or time-to-close shifts.

Disclaimer

Educational content only; not legal, tax, or professional advice. Verify calculations and compliance with current law and your organisation’s policies before use.

Related Reading

- AI Prompt Engineering in 2025

- How to Use ChatGPT Like a Pro in 2025

- GPT-5 Features You Should Actually Use in 2025

External References

- NIST AI Risk Management Framework 1.0: governance and evaluation guidance. https://www.nist.gov/itl/ai-risk-management-framework

- Digital Personal Data Protection Act, 2023 (India): policy and retention basis. https://www.meity.gov.in/

- GST Portal (Government of India): primary reference for GST rules and forms. https://www.gst.gov.in/